I used 'AI' to produce this summary of a paper about an LLM task with 'zero errors'

Sorry, not sorry

Paper: arxiv.org/pdf/2511.09030

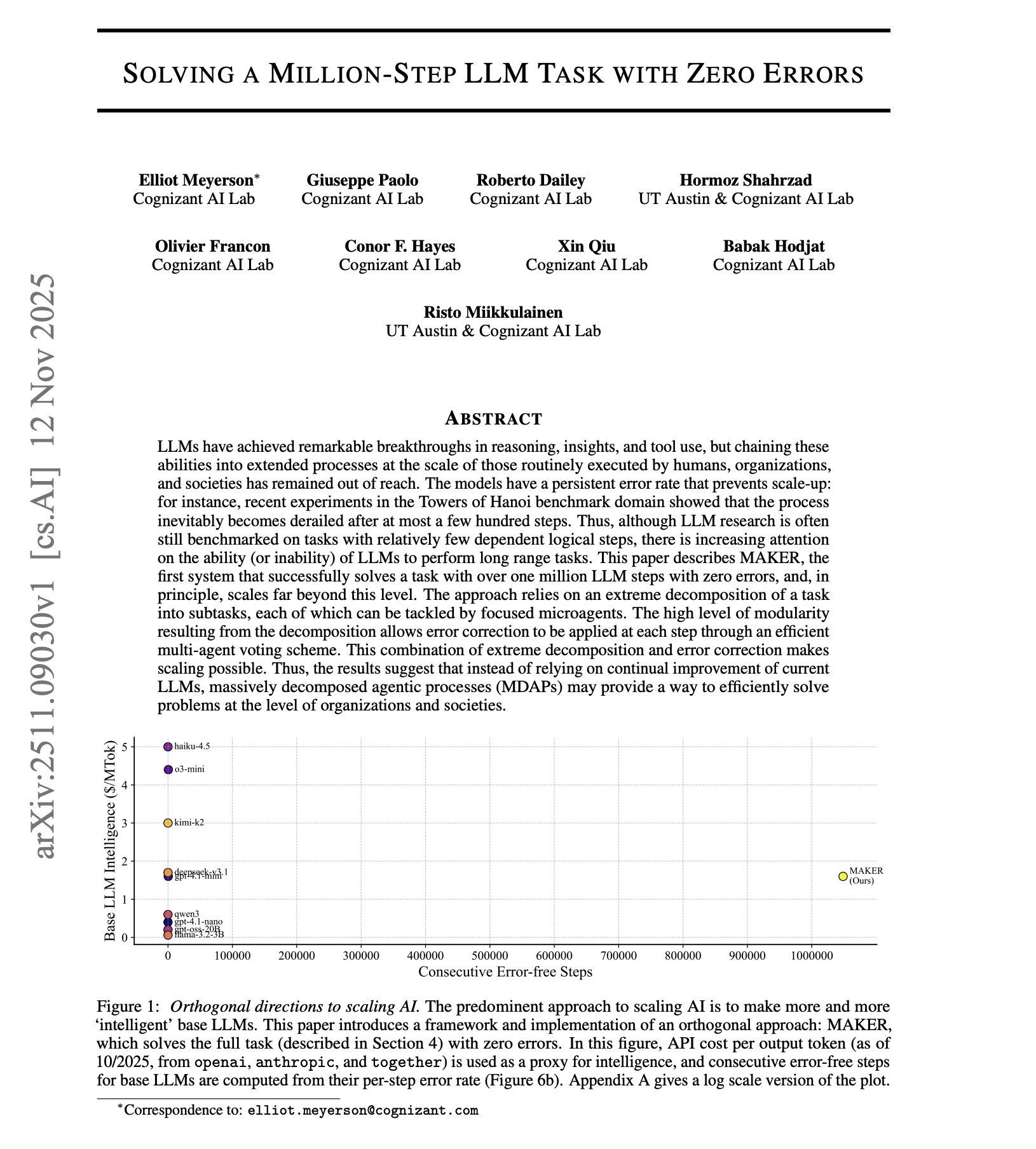

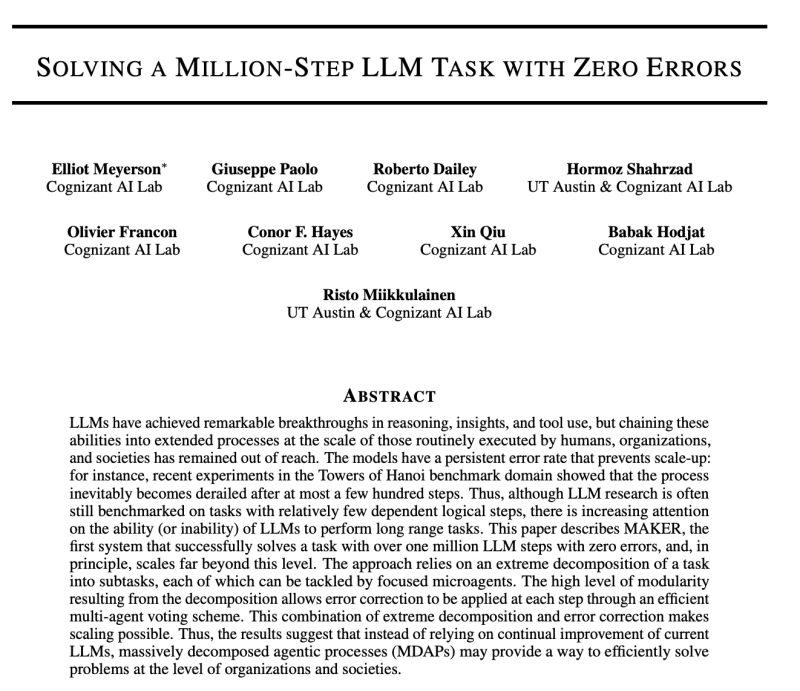

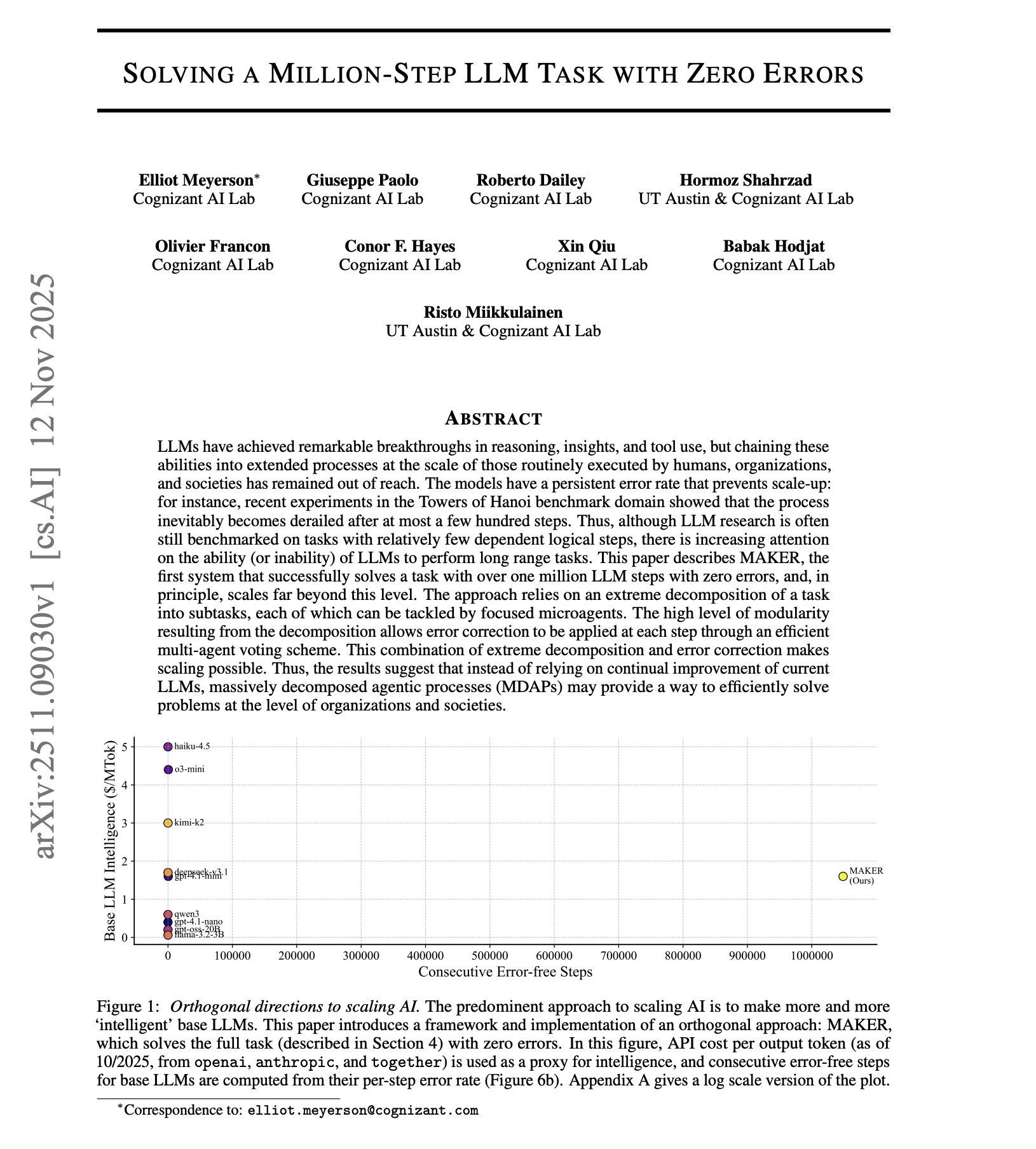

The paper "Solving a Million-Step LLM Task with Zero Errors" by Elliot Meyerson et al. (arXiv:2511.09030) presents MAKER, a framework that lets large language models (LLMs) execute very long sequences of dependent steps with zero errors. It tackles a fundamental problem: LLMs have an inherent per-step error rate, so when millions of dependent steps are chained together naively, completing them all without a single failure is nearly impossible.
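A quick back-of-envelope check shows why naive chaining fails. This sketch rests on a simplifying assumption of our own, not a figure from the paper: every step fails independently with the same probability p, so s steps all succeed with probability (1 - p)^s. The 0.1% per-step error rate below is hypothetical.

```python
import math

def log10_success_probability(p_error: float, steps: int) -> float:
    """Return log10 of the chance that `steps` dependent steps all succeed.

    Assumes every step fails independently with the same probability
    `p_error`. Working in log space avoids floating-point underflow.
    """
    return steps * math.log10(1.0 - p_error)

steps = 2**20 - 1   # moves needed for 20-disk Towers of Hanoi
p_error = 0.001     # hypothetical per-step error rate of 0.1%

print(f"{steps:,} steps, P(no errors) ~ 10^{log10_success_probability(p_error, steps):.0f}")
# prints: 1,048,575 steps, P(no errors) ~ 10^-456
```

Even a step that is 99.9% reliable leaves essentially no chance of a clean million-step run, which is the gap that decomposition plus per-step error correction is meant to close.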

Key elements of the approach include:

- Massively decomposing a task into the smallest possible subtasks, so that each individual step is easier to get right.

- Employing error correction, including "red-flagging" invalid outputs so that potentially erroneous steps are discarded instead of being used.

- Using a voting scheme called "first-to-ahead-by-k": for each step, outputs are sampled repeatedly until one candidate answer has k more votes than any other.

- Applying this strategy to the Towers of Hanoi puzzle with 20 disks, which requires 2^20 - 1 = 1,048,575 moves, and completing the task with zero errors.
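The red-flagging and voting ideas in the list above can be sketched in a few lines. This is a minimal illustration under our own assumptions, not the paper's code: `sample` is a hypothetical stand-in for one LLM call that returns a raw string, and the red-flag rules (a length cap and a required `move=` prefix) are illustrative placeholders rather than the paper's exact checks. Red-flagged samples cast no vote, and sampling stops as soon as one answer leads every other answer by k votes.

```python
from collections import Counter
from typing import Callable, Optional

def parse_or_flag(raw: str, max_chars: int = 200) -> Optional[str]:
    """Return the answer in `raw`, or None if the output is red-flagged.

    Hypothetical red-flag rules: outputs that are too long or that lack the
    expected 'move=' prefix are discarded instead of being repaired.
    """
    if len(raw) > max_chars or not raw.startswith("move="):
        return None
    return raw[len("move="):].strip()

def first_to_ahead_by_k(sample: Callable[[], str], k: int, max_samples: int = 1000) -> str:
    """Draw samples until one answer has k more votes than any other answer."""
    votes = Counter()
    for _ in range(max_samples):
        answer = parse_or_flag(sample())
        if answer is None:
            continue  # red-flagged sample: discarded, casts no vote
        votes[answer] += 1
        leader, leader_votes = votes.most_common(1)[0]
        runner_up = max((n for a, n in votes.items() if a != leader), default=0)
        if leader_votes - runner_up >= k:
            return leader
    raise RuntimeError(f"no answer reached a {k}-vote lead in {max_samples} samples")

# A scripted sampler stands in for repeated LLM calls on a single step.
outputs = iter(["move=A->C", "move=A->B", "garbled text", "move=A->C", "move=A->C"])
print(first_to_ahead_by_k(lambda: next(outputs), k=2))  # prints: A->C
```

Discarding a malformed sample outright, rather than trying to salvage it, keeps the vote counting only outputs that passed every check.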

The results show that LLM-based systems can be scaled to extremely long tasks by combining extreme decomposition with error correction, rather than relying solely on continued improvements to the underlying LLMs. The paper also outlines future research directions, including automating decomposition, handling more varied types of steps, and dealing with correlated errors.
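Why should voting make this scale? Here is a rough sketch under simplifying assumptions of our own: each unflagged sample is correct with independent probability p, and in the worst case every wrong sample agrees on the same wrong answer. The vote is then a gambler's-ruin race to a lead of k, so a step is decided wrongly with probability r^k / (1 + r^k), where r = (1 - p) / p. The snippet finds the smallest k that gives a hypothetical 95% chance of finishing the whole task; p = 0.99 is also a hypothetical value, and this is not necessarily the paper's exact derivation.

```python
import math

def step_error(p: float, k: int) -> float:
    """Chance that a first-to-ahead-by-k vote settles on a wrong answer.

    Assumes independent samples, each correct with probability p > 0.5, and
    (worst case) that all wrong samples agree, so the vote is a
    gambler's-ruin race between two answers to a lead of k.
    """
    r = (1.0 - p) / p
    return r**k / (1.0 + r**k)

def task_success(p: float, k: int, steps: int) -> float:
    """Chance that all `steps` independent per-step votes are correct."""
    return math.exp(steps * math.log1p(-step_error(p, k)))

def min_k(p: float, steps: int, target: float = 0.95) -> int:
    """Smallest k whose whole-task success probability reaches `target`."""
    if p <= 0.5:
        raise ValueError("voting only helps when samples are correct more often than not")
    k = 1
    while task_success(p, k, steps) < target:
        k += 1
    return k

for steps in (1_000, 2**20 - 1, 10**9):
    print(f"{steps:>13,} steps -> k = {min_k(0.99, steps)}")
# k comes out as 3, 4 and 6 for the three step counts
```

Under these assumptions the required k grows only slowly with task length: r^k has to shrink roughly like 1/s, so k grows with log s, which is the intuition behind combining decomposition with per-step voting.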

In summary, this work achieves error-free long-horizon sequential execution with LLMs by building an ensemble-based, massively decomposed process, which points toward using LLMs in large-scale or safety-critical applications [1][2].
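To make "massively decomposed" concrete for the Towers of Hanoi case, here is an illustrative sketch of our own, not the paper's prompts or code. Each move is computed from the current peg state alone, so every one of the 2^20 - 1 moves is a self-contained single-step subtask. It uses the classic iterative strategy: on odd-numbered moves the smallest disk moves one peg around a fixed cycle, and on even-numbered moves the only legal move not involving the smallest disk is made. The names `next_move` and `solve` are hypothetical.

```python
from typing import List, Tuple

Pegs = List[List[int]]  # each peg lists disk sizes from bottom to top

def next_move(pegs: Pegs, move_index: int, n_disks: int) -> Tuple[int, int]:
    """Return (source, target) for move `move_index` (0-based).

    Only the current peg state is needed, so each call is a self-contained
    single-step subtask.
    """
    # The smallest disk cycles 0->1->2 when n is even, 0->2->1 when n is odd.
    step = 1 if n_disks % 2 == 0 else 2
    small = next(i for i, peg in enumerate(pegs) if peg and peg[-1] == 1)
    if move_index % 2 == 0:
        return small, (small + step) % 3
    # Otherwise make the only legal move between the other two pegs.
    a, b = [i for i in range(3) if i != small]
    if not pegs[a]:
        return b, a
    if not pegs[b]:
        return a, b
    return (a, b) if pegs[a][-1] < pegs[b][-1] else (b, a)

def solve(n_disks: int) -> Pegs:
    """Apply all 2**n_disks - 1 moves, checking that each one is legal."""
    pegs: Pegs = [list(range(n_disks, 0, -1)), [], []]
    for i in range(2**n_disks - 1):
        src, dst = next_move(pegs, i, n_disks)
        disk = pegs[src].pop()
        if pegs[dst] and pegs[dst][-1] < disk:
            raise ValueError(f"illegal move {src}->{dst} at step {i}")
        pegs[dst].append(disk)
    return pegs

print(solve(3))  # prints: [[], [], [3, 2, 1]]
```

Here plain code plays the role of the per-step worker only to show the shape of the decomposition; in MAKER each such step would be handled by LLM calls combined with voting and red-flagging.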

Citations:

[1] Solving a Million-Step LLM Task with Zero Errors. arXiv.org.

[2] Solving a Million-Step LLM Task with Zero Errors.

[3] computational costs increase with ensemble size and error ... elvis (@omarsar0), X (formerly Twitter).

[4] Paper page - Solving a Million-Step LLM Task with Zero Errors.

[5] Cognizant Introduces MAKER: Achieving Million-Step, Zero ... Reddit, r/mlscaling. https://www.reddit.com/r/mlscaling/comments/1owcnsn/cognizant_introduces_maker_achieving_millionstep/

[6] New paper on breaking down AI tasks into tiny steps for zero errors. Sneha Bhapkar, LinkedIn.

[7] Future plans to integrate MAKER/MDAP abstractions? LangChain Forum.

[8] Shattering the Illusion: MAKER Achieves Million-Step, Zero-Error LLM Reasoning. Deep learning, Facebook.

[9] PyTorch: An Imperative Style, High-Performance Deep Learning Library. Semantic Scholar. https://www.semanticscholar.org/paper/PyTorch:-An-Imperative-Style,-High-Performance-Deep-Paszke-Gross/3c8a456509e6c0805354bd40a35e3f2dbf8069b1

[10] Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training. Semantic Scholar. https://www.semanticscholar.org/paper/Sleeper-Agents:-Training-Deceptive-LLMs-that-Safety-Hubinger-Denison/9363e8e1fe2be2a13b4d6f5fc61bbaed14ab9a23