10 major VPN companies are run by the same group of people.

So think about #privacy again before paying for one.

**Bias and Fairness**: These models can inherit biases present in the training data, which may lead to unfair or inaccurate results in certain scenarios. #FACTSTR

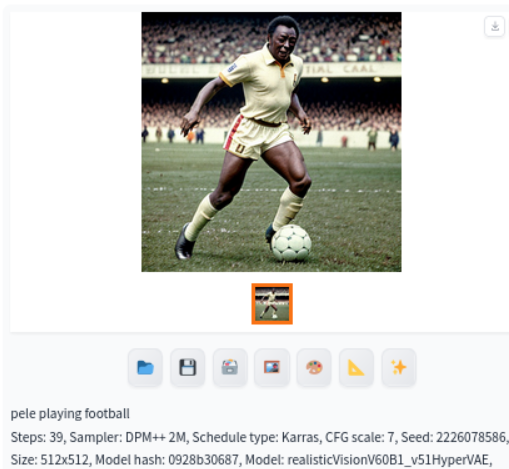

#pele, made with just near-default settings

I'm sure real developers in the field are doing better and more powerful work, but it's a start after all.