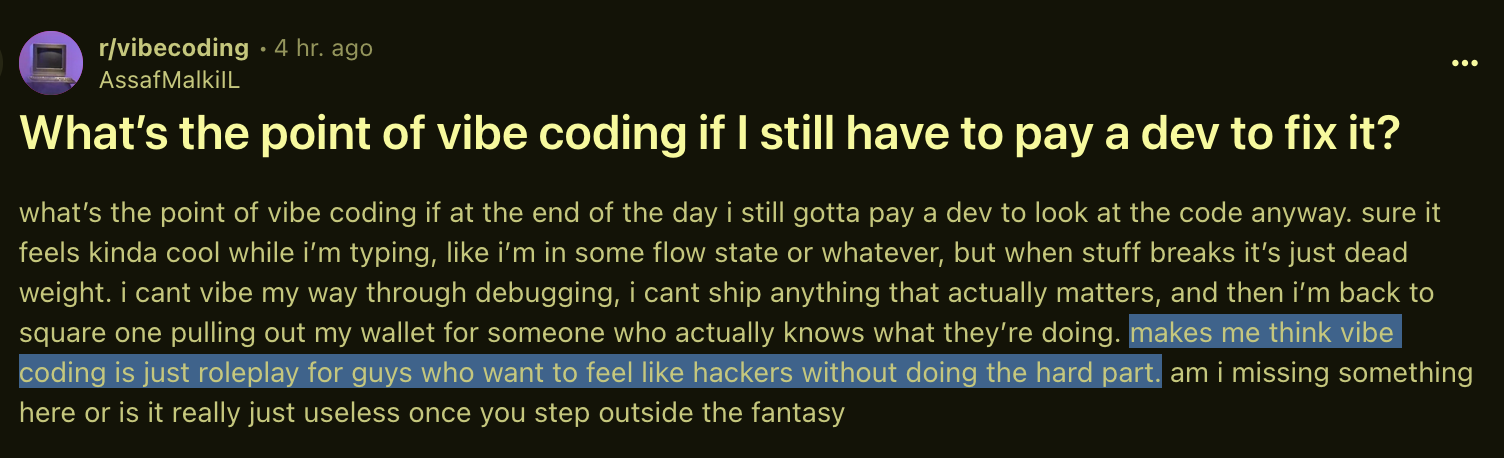

I think the vibecoding subreddit has accidentally stumbled on the best description of vibecoding:

It's "roleplay for guys [it is always guys] who want to feel like hackers without doing the hard part".

(Source: https://www.reddit.com/r/vibecoding/comments/1mu6t8z/whats_the_point_of_vibe_coding_if_i_still_have_to/)

#ai #vibecoding