You have to spell it out as "GPUs" or else the gamers in the office get excited

Okay, Web has a temporary fix. The permanent fix will cost extra.

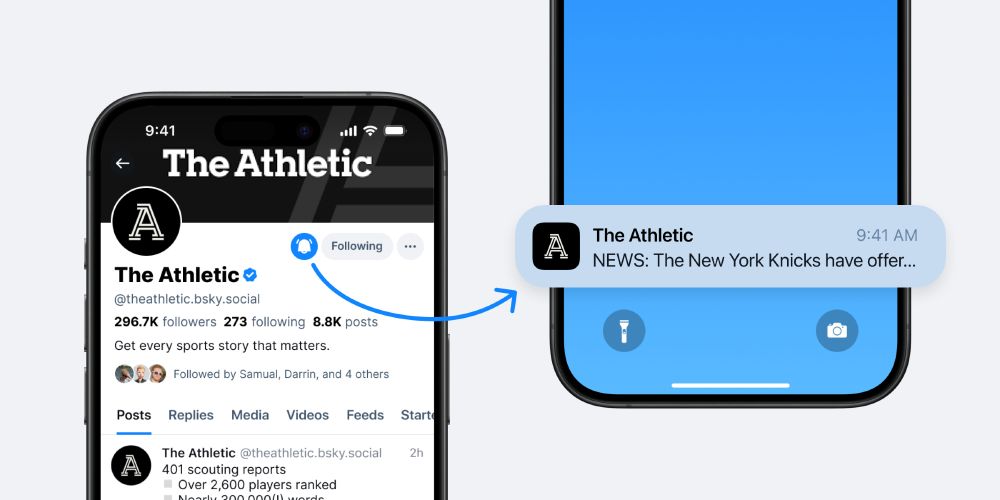

Bluesky Social

Paul Frazee (@pfrazee.com)

We're debugging a performance issue with the web app. If it starts dragging, just refresh the page.